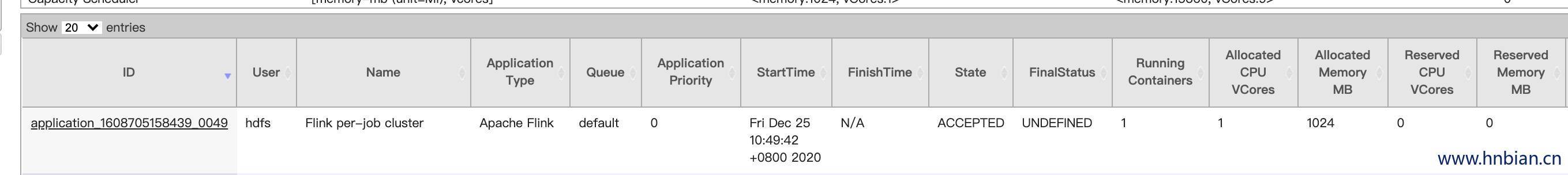

| [hdfs@node2 flink-1.10.2]$ ./bin/flink run -m yarn-cluster ./examples/batch/WordCount.jar

Setting HADOOP_CONF_DIR=/etc/hadoop/conf because no HADOOP_CONF_DIR was set.

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/opt/flink-1.10.2/lib/slf4j-log4j12-1.7.15.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/hdp/3.1.0.0-78/hadoop/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

2020-12-25 10:49:01,986 INFO org.apache.flink.yarn.cli.FlinkYarnSessionCli - Found Yarn properties file under /tmp/.yarn-properties-hdfs.

2020-12-25 10:49:01,986 INFO org.apache.flink.yarn.cli.FlinkYarnSessionCli - Found Yarn properties file under /tmp/.yarn-properties-hdfs.

2020-12-25 10:49:02,457 WARN org.apache.flink.yarn.cli.FlinkYarnSessionCli - The configuration directory ('/opt/flink-1.10.2/conf') already contains a LOG4J config file.If you want to use logback, then please delete or rename the log configuration file.

2020-12-25 10:49:02,457 WARN org.apache.flink.yarn.cli.FlinkYarnSessionCli - The configuration directory ('/opt/flink-1.10.2/conf') already contains a LOG4J config file.If you want to use logback, then please delete or rename the log configuration file.

Executing WordCount example with default input data set.

Use --input to specify file input.

Printing result to stdout. Use --output to specify output path.

2020-12-25 10:49:03,717 INFO org.apache.hadoop.yarn.client.RMProxy - Connecting to ResourceManager at node1/192.168.0.39:8050

2020-12-25 10:49:04,377 INFO org.apache.hadoop.yarn.client.AHSProxy - Connecting to Application History server at node2/192.168.0.38:10200

2020-12-25 10:49:04,423 INFO org.apache.flink.yarn.YarnClusterDescriptor - No path for the flink jar passed. Using the location of class org.apache.flink.yarn.YarnClusterDescriptor to locate the jar

2020-12-25 10:49:04,809 INFO org.apache.hadoop.conf.Configuration - found resource resource-types.xml at file:/etc/hadoop/3.1.0.0-78/0/resource-types.xml

2020-12-25 10:49:04,891 WARN org.apache.flink.yarn.YarnClusterDescriptor - Neither the HADOOP_CONF_DIR nor the YARN_CONF_DIR environment variable is set. The Flink YARN Client needs one of these to be set to properly load the Hadoop configuration for accessing YARN.

2020-12-25 10:49:05,071 INFO org.apache.flink.yarn.YarnClusterDescriptor - Cluster specification: ClusterSpecification{masterMemoryMB=1024, taskManagerMemoryMB=1728, slotsPerTaskManager=1}

2020-12-25 10:49:07,172 WARN org.apache.hadoop.hdfs.shortcircuit.DomainSocketFactory - The short-circuit local reads feature cannot be used because libhadoop cannot be loaded.

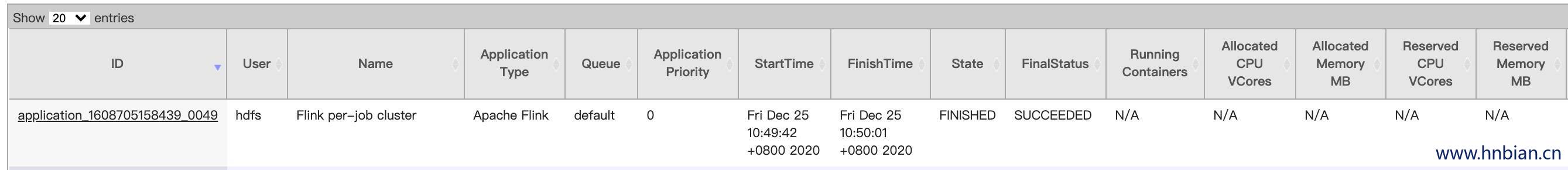

2020-12-25 10:49:12,476 INFO org.apache.flink.yarn.YarnClusterDescriptor - Submitting application master application_1608705158439_0049

2020-12-25 10:49:12,774 INFO org.apache.hadoop.yarn.client.api.impl.YarnClientImpl - Submitted application application_1608705158439_0049

2020-12-25 10:49:12,775 INFO org.apache.flink.yarn.YarnClusterDescriptor - Waiting for the cluster to be allocated

2020-12-25 10:49:12,777 INFO org.apache.flink.yarn.YarnClusterDescriptor - Deploying cluster, current state ACCEPTED

2020-12-25 10:49:19,073 INFO org.apache.flink.yarn.YarnClusterDescriptor - YARN application has been deployed successfully.

2020-12-25 10:49:19,074 INFO org.apache.flink.yarn.YarnClusterDescriptor - Found Web Interface node3:35416 of application 'application_1608705158439_0049'.

Job has been submitted with JobID 505a14cbbf0cca7cad029ed90fe048eb

Program execution finished

Job with JobID 505a14cbbf0cca7cad029ed90fe048eb has finished.

Job Runtime: 10932 ms

Accumulator Results:

- 7fbe82214da97e7cbb5a60d0890bdbdb (java.util.ArrayList) [170 elements]

(a,5)

(action,1)

(after,1)

(against,1)

(all,2)

(and,12)

(arms,1)

(arrows,1)

(awry,1)

(ay,1)

(bare,1)

(be,4)

(bear,3)

(bodkin,1)

(bourn,1)

(but,1)

|
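
The accumulator results above are printed as one `(word,count)` tuple per line, mixed in with log and status lines. To post-process them outside Flink, a small parser is enough. The following is only a sketch: the function name `parse_word_counts` and the regular expression are illustrative assumptions based on the tuple format shown in this output, not part of Flink or its WordCount example.

```python
import re

# Assumed line format, taken from the output above: "(word,count)".
PAIR = re.compile(r"^\((?P<word>[^,()]+),(?P<count>\d+)\)$")


def parse_word_counts(text):
    """Return {word: count} for every "(word,count)" line in text.

    Lines that do not match (log messages, job status) are skipped.
    """
    counts = {}
    for line in text.splitlines():
        match = PAIR.match(line.strip())
        if match:
            counts[match.group("word")] = int(match.group("count"))
    return counts


# A few lines copied from the run above, including one non-result line.
sample = """
Job Runtime: 10932 ms
(a,5)
(action,1)
(and,12)
(bear,3)
"""

counts = parse_word_counts(sample)
# Show the two most frequent words in the sample.
print(sorted(counts.items(), key=lambda kv: -kv[1])[:2])
# prints [('and', 12), ('a', 5)]
```

For larger results it is usually simpler to pass `--output` to the job, as the log suggests, and read the written file rather than scraping stdout.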