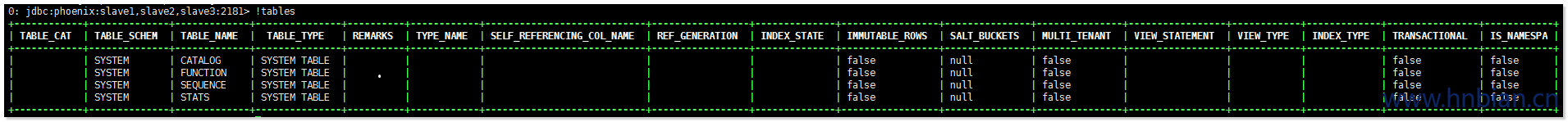

1. 查看表 1 0: jdbc:phoenix:slave1,slave2,slave3:2181> !tables

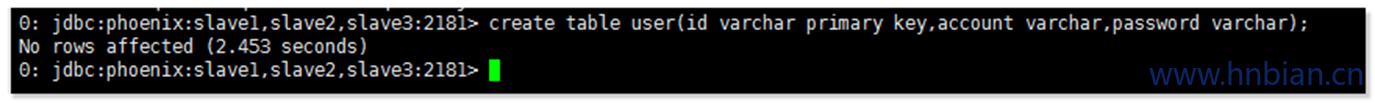

2. 创建表 1 2 # 创建user 表 create table user (id varchar primary key ,account varchar ,password varchar );

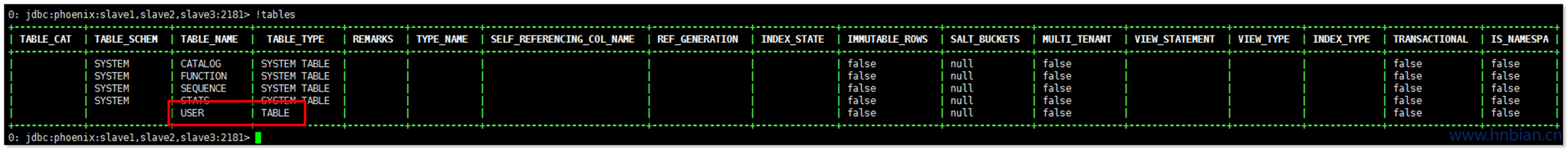

1 2 0: jdbc:phoenix:slave1,slave2,slave3:2181> !tables

Phoenix会自动将表名和字段名转换为大写字母,如果不想转换的话可以使用双引号括起来, 如下 sql

1 2 3 4 5 6 7 8 9 10 11 create table "tracer_record" ( ROWKEY varchar primary key, "tracer" ."elapsed" INTEGER, "tracer" ."endTime" varchar, "tracer" ."nodeIndexNo" varchar, "tracer" ."startTime" varchar, "tracer" ."tracerId" varchar, "tracer" ."tracerName" varchar, "tracer" ."tracerType" varchar, "tracer" ."url" varchar );

3. 查看hbase User 表结构 默认列族是 0, rowkey 默认对应主键 primary key

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 hbase(main):003:0* describe 'USER' Table USER is ENABLED USER, { TABLE_ATTRIBUTES => { coprocessor$1 => '|org.apache.phoenix.coprocessor.ScanRegionObserver|805306366|' , coprocessor$2 => '|org.apache.phoenix.coprocessor.UngroupedAggregateRegionObserver|805306366|' , coprocessor$3 => '|org.apache.phoenix.coprocessor.GroupedAggregateRegionObserver|805306366|' , coprocessor$4 => '|org.apache.phoenix.coprocessor.ServerCachingEndpointImpl|805306366|' , coprocessor$5 => '|org.apache.phoenix.hbase.index.Indexer|805306366|index.builder=org.apache.phoenix.index.PhoenixIndexBuilder,org.apache.hadoop.hbase.index.codec.class=org.apache.phoenix.index.PhoenixIndexCodec' } COLUMN FAMILIES DESCRIPTION {NAME => '0' , DATA_BLOCK_ENCODING => 'FAST_DIFF' , BLOOMFILTER => 'ROW' , REPLICATION_SCOPE => '0' , VERSIONS => '1' , COMPRESSION => 'NONE' , MIN_VERSIONS => '0' , TTL => 'FOREVER' , KEEP_DELETED_CELLS => 'FALSE' , BLOCKSIZE => '65536' , IN_MEMORY => 'false' , BLOCKCACHE => 'true' } 1 row(s) in 0.2340 seconds

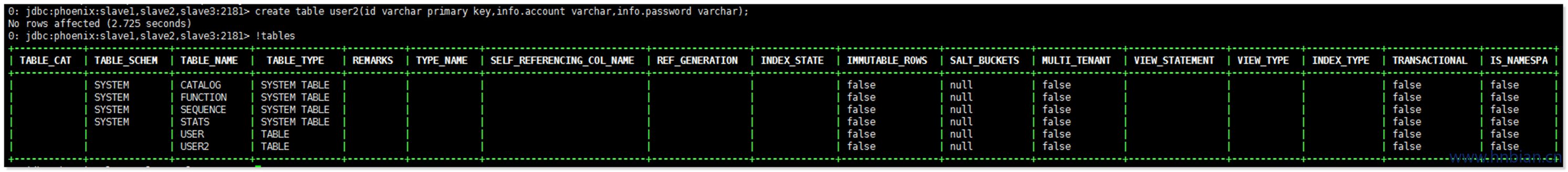

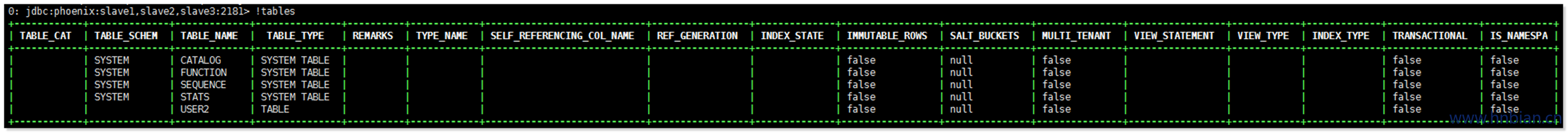

4. 创建表 指定列族 1 2 3 4 5 create table user2( id varchar primary key , info.account varchar , info.password varchar );

查看user2表结构 1 2 3 4 5 6 7 8 9 10 11 12 13 hbase(main):004:0> describe 'USER2' Table USER2 is ENABLED USER2, {TABLE_ATTRIBUTES => { coprocessor$1 => '|org.apache.phoenix.coprocessor.ScanRegionObserver|805306366|' , coprocessor$2 => '|org.apache.phoenix.coprocessor.UngroupedAggregateRegionObserver|805306366|' , coprocessor$3 => '|org.apache.phoenix.coprocessor.GroupedAggregateRegionObserver|805306366|' , coprocessor$4 => '|org.apache.phoenix.coprocessor.ServerCachingEndpointImpl|805306366|' , coprocessor$5 => '|org.apache.phoenix.hbase.index.Indexer|805306366|index.builder=org.apache.phoenix.index.PhoenixIndexBuilder,org.apache.hadoop.hbase.index.codec.class=org.apache.phoenix.index.PhoenixIndexCodec' } COLUMN FAMILIES DESCRIPTION {NAME => 'INFO' , DATA_BLOCK_ENCODING => 'FAST_DIFF' , BLOOMFILTER => 'ROW' , REPLICATION_SCOPE => '0' , VERSIONS => '1' , COMPRESSION => 'NONE' , MIN_VERSIONS => '0' , TTL => 'FOREVER' , KEEP_DELETED_CELLS => 'FALSE' , BLOCKSIZE => '65536' , IN_MEMORY => 'false' , BLOCKCACHE => 'true' } 1 row(s) in 0.0650 seconds

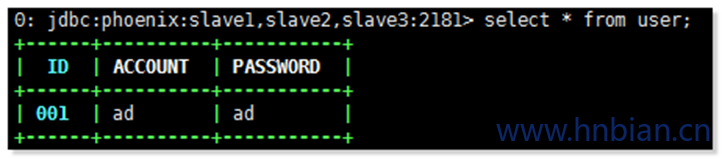

插入数据 1 2 # 插入数据关键字 upsert upsert into user (id,account,password) values ('001' ,'ad' ,'ad' );

查询数据

建表并插入准备好的数据 先创建 sql 文件 WEB_STAT.sql

1 2 3 4 5 6 7 8 9 10 CREATE TABLE IF NOT EXISTS WEB_STAT ( HOST CHAR (2 ) NOT NULL , DOMAIN VARCHAR NOT NULL , FEATURE VARCHAR NOT NULL , DATE DATE NOT NULL , USAGE.CORE BIGINT , USAGE.DB BIGINT , STATS.ACTIVE_VISITOR INTEGER CONSTRAINT PK PRIMARY KEY (HOST, DOMAIN, FEATURE, DATE ) );

准备数据文件WEB_STAT.csv

1 2 3 4 NA,Salesforce.com,Login,2013-01-01 01:01:01,35,42,10 EU,Salesforce.com,Reports,2013-01-02 12:02:01,25,11,2 EU,Salesforce.com,Reports,2013-01-02 14:32:01,125,131,42 NA,Apple.com,Login,2013-01-01 01:01:01,35,22,40

1 2 3 4 cd /opt/hnbian/phoenix-4.8.0/bin ./psql.py slave1,slave2,slave3:2181 ../examples/WEB_STAT.sql ../examples/WEB_STAT.csv

更新数据 更新数据语法与插入相同

upsert into user(id,account,password) values(‘001’,’ad’,’admin’);

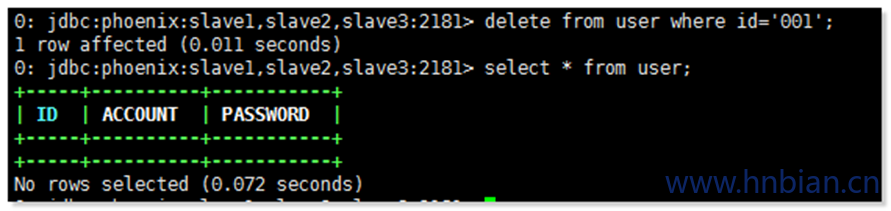

删除数据 Delete from user where id=’001’

删除表 Drop table user;

JDBC 操作 hbase 首先建立maven项目 1 2 3 4 5 6 7 <dependencies > <dependency > <groupId > org.apache.phoenix</groupId > <artifactId > phoenix-core</artifactId > <version > 4.2.2</version > </dependency > </dependencies >

将HBase集群的hbase-site.xml,hdfs-size.xml配置文件添加到项目的resources目录下。为了查看日志输出配置了一个简单的log4j.properties,也一并放到resources目录下。 log4j.properties 内容如下:

1 2 3 4 5 6 log4j.rootLogger=WARN, A1 log4j.appender.A1=org.apache.log4j.ConsoleAppender log4j.appender.A1.layout=org.apache.log4j.PatternLayout log4j.appender.A1.layout.ConversionPattern=%-4r [%t] %-5p %c %x - %m%n

获得链接 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 public Connection getConn () { try { Class.forName("org.apache.phoenix.jdbc.PhoenixDriver" ); return DriverManager.getConnection("jdbc:phoenix:slave1,slave2,slave3:2181" ); } catch (Exception e) { e.printStackTrace(); return null ; } }

新建表 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 public void create () { Connection conn = null ; try { conn = getConnection(); if (conn == null ) { System.out.println("conn is null..." ); return ; } ResultSet rs = conn.getMetaData().getTables(null , null , "USER" , null ); if (rs.next()) { System.out.println("table user is exist..." ); return ; } String sql = "CREATE TABLE user (id varchar PRIMARY KEY,INFO.account varchar ,INFO.passwd varchar)" ; PreparedStatement ps = conn.prepareStatement(sql); ps.execute(); System.out.println("create success..." ); } catch (Exception e) { e.printStackTrace(); } finally { if (conn != null ) { try { conn.close(); } catch (Exception e) { e.printStackTrace(); } } } }

插入数据(修改数据与插入一致) 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 public void add () { Connection conn = null ; try { conn = getConnection(); if (conn == null ) { System.out.println("conn is null..." ); return ; } String sql = "upsert into user(id, INFO.account, INFO.passwd) values('001', 'admin', 'admin')" ; PreparedStatement ps = conn.prepareStatement(sql); String msg = ps.executeUpdate() > 0 ? "insert success..." : "insert fail..." ; conn.commit(); System.out.println(msg); } catch (Exception e) { e.printStackTrace(); } finally { if (conn != null ) { try { conn.close(); } catch (Exception e) { e.printStackTrace(); } } } }

查询数据 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 public void select () { Connection conn = null ; try { conn = getConnection(); if (conn == null ) { System.out.println("conn is null..." ); return ; } String sql = "select * from user" ; PreparedStatement ps = conn.prepareStatement(sql); ResultSet rs = ps.executeQuery(); System.out.println("id" + "\t" + "account" + "\t" + "passwd" ); System.out.println("======================" ); if (rs != null ) { while (rs.next()) { System.out.print(rs.getString("id" ) + "\t" ); System.out.print(rs.getString("account" ) + "\t" ); System.out.println(rs.getString("passwd" )); } } } catch (Exception e) { e.printStackTrace(); } finally { if (conn != null ) { try { conn.close(); } catch (Exception e) { e.printStackTrace(); } } } }

删除数据 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 public void deleteData () { Connection conn = null ; try { conn = getConnection(); if (conn == null ) { System.out.println("conn is null..." ); return ; } String sql = "delete from user where id='001'" ; PreparedStatement ps = conn.prepareStatement(sql); String msg = ps.executeUpdate() > 0 ? "delete success..." : "delete fail..." ; conn.commit(); System.out.println(msg); } catch (Exception e) { e.printStackTrace(); } finally { if (conn != null ) { try { conn.close(); } catch (Exception e) { e.printStackTrace(); } } } }

删除表 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 public void deleteTable () { Connection conn = null ; try { conn = getConnection(); if (conn == null ) { System.out.println("conn is null..." ); return ; } String sql = "drop table user2" ; PreparedStatement ps = conn.prepareStatement(sql); ps.execute(); System.out.println("drop success..." ); } catch (Exception e) { e.printStackTrace(); } finally { if (conn != null ) { try { conn.close(); } catch (Exception e) { e.printStackTrace(); } } } }