1. Install git

# Check whether git is installed

[root@node1 ~]# git --help

-bash: git: command not found

# If git is not installed, install it first

[root@node1 ~]# yum install git

Loaded plugins: fastestmirror

Repository base is listed more than once in the configuration

Determining fastest mirrors

* base: mirrors.aliyun.com

* extras: mirrors.aliyun.com

* updates: mirrors.aliyun.com

......

Package git-1.8.3.1-23.el7_8.x86_64 already installed and latest version

Nothing to do

You have new mail in /var/spool/mail/root
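The check and the installation above can be folded into a single idempotent step. A minimal sketch, assuming a yum-based system (CentOS/RHEL 7, as in the transcript) and root privileges; the function name `ensure_git` is hypothetical:

```bash
# Hypothetical helper: install git only when it is not already available.
# Assumes a yum-based distribution (CentOS/RHEL 7) and root privileges.
ensure_git() {
  if command -v git >/dev/null 2>&1; then
    # git is on PATH: just report the installed version
    echo "git already installed: $(git --version)"
  else
    yum install -y git
  fi
}
```

Run it as `ensure_git`. Using `command -v` avoids depending on the output of `git --help`, and `-y` keeps yum from waiting for confirmation.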

2. Set the VERSION variable

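# Extract the HDP stack version (major.minor) from the installed hadoop-client package

[root@node1 opt]# VERSION=$(hdp-select status hadoop-client | sed 's/hadoop-client - \([0-9]\.[0-9]\).*/\1/')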

[root@node1 opt]# echo $VERSION
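The sed expression keeps only the leading `major.minor` digits of the version that `hdp-select` reports. A minimal sketch that wraps the same expression in a function so it can be checked against a sample line; the function name is hypothetical, and the sample string `hadoop-client - 3.1.0.0-78` is an assumption modelled on the `/usr/hdp/3.1.0.0-78` paths that appear later in this guide:

```bash
# Hypothetical helper: reduce a "hadoop-client - X.Y..." line to "X.Y",
# using the same sed expression as the VERSION assignment above.
stack_version_from() {
  echo "$1" | sed 's/hadoop-client - \([0-9]\.[0-9]\).*/\1/'
}

# On a live node: VERSION=$(stack_version_from "$(hdp-select status hadoop-client)")
stack_version_from "hadoop-client - 3.1.0.0-78"   # prints 3.1
```

Two properties of the expression are worth knowing: `[0-9]\.[0-9]` matches single digits only, so a two-digit minor version would be cut to its first digit, and a line that does not match is printed unchanged rather than producing an error.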

3. Download ambari-flink-service

# Clone ambari-flink-service into the ambari-server resources directory

[root@node1 opt]# sudo git clone https://github.com/abajwa-hw/ambari-flink-service.git /var/lib/ambari-server/resources/stacks/HDP/$VERSION/services/FLINK

Cloning into '/var/lib/ambari-server/resources/stacks/HDP/3.1/services/FLINK'...

remote: Enumerating objects: 192, done.

remote: Total 192 (delta 0), reused 0 (delta 0), pack-reused 192

Receiving objects: 100% (192/192), 2.08 MiB | 35.00 KiB/s, done.

Resolving deltas: 100% (89/89), done.

[root@node1 opt]# cd /var/lib/ambari-server/resources/stacks/HDP/3.1/services/FLINK/

[root@node1 FLINK]# ll

total 20

drwxr-xr-x 2 root root 58 Feb 23 16:16 configuration

-rw-r--r-- 1 root root 223 Feb 23 16:16 kerberos.json

-rw-r--r-- 1 root root 1777 Feb 23 16:16 metainfo.xml

drwxr-xr-x 3 root root 21 Feb 23 16:16 package

-rwxr-xr-x 1 root root 8114 Feb 23 16:16 README.md

-rw-r--r-- 1 root root 125 Feb 23 16:16 role_command_order.json

drwxr-xr-x 2 root root 236 Feb 23 16:16 screenshots

4. Modify the configuration files
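The `metainfo.xml` change described next can also be made non-interactively, which helps when the same service definition has to be prepared on several Ambari servers. A minimal sketch using GNU sed; it assumes the service version is the only `<version>` element in the file (check this before relying on it), and the function name is hypothetical:

```bash
# Hypothetical helper: rewrite every <version>...</version> element in the
# given metainfo.xml to the requested version, then show the result.
# Assumes GNU sed (-i without a backup suffix). <schemaVersion> is not touched
# because the pattern requires the literal tag "<version>".
set_service_version() {
  local file="$1" version="$2"
  sed -i "s#<version>[^<]*</version>#<version>${version}</version>#" "$file"
  grep -n '<version>' "$file"
}
```

Usage, from the FLINK service directory: `set_service_version metainfo.xml 1.10.3`. The trailing `grep` prints the changed line so the edit can be confirmed at a glance.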

- Edit metainfo.xml and change the version to be installed to 1.10.3

[root@node1 FLINK]# vim metainfo.xml

<name>FLINK</name>

<displayName>Flink</displayName>

<comment>Apache Flink is a streaming ...</comment>

<version>1.10.3</version>

- Configure the failover mode for Flink on YARN
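This change comes down to which class the `yarn.client.failover-proxy-provider` property names. That property belongs to YARN's yarn-site configuration, but the exact file edited here is not named, so the sketch takes the file as an argument. The text also does not say which of the two values in the fragment below is the final one; the usage line assumes a switch to `ConfiguredRMFailoverProxyProvider`, which is the change commonly reported for Flink on HDP 3.x. The helper name is hypothetical, and it assumes GNU sed and that the `<name>` and `<value>` lines are adjacent:

```bash
# Hypothetical helper: in an XML config file, replace the class in the <value>
# line that directly follows <name>yarn.client.failover-proxy-provider</name>.
# Assumes GNU sed and that <name> and <value> sit on adjacent lines.
set_failover_provider() {
  local file="$1" class="$2"
  # 'n' prints the matching <name> line and loads the next line, where the
  # substitution is then applied; all other properties are left untouched.
  sed -i "/<name>yarn\.client\.failover-proxy-provider<\/name>/{n;s#<value>[^<]*</value>#<value>${class}</value>#;}" "$file"
}
```

Example (file name and target class are assumptions): `set_failover_provider yarn-site.xml org.apache.hadoop.yarn.client.ConfiguredRMFailoverProxyProvider`. On an Ambari-managed cluster, changes made directly to yarn-site.xml can be overwritten, so the Ambari YARN configuration page is the safer place to make the change permanently.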

<property>

<name>yarn.client.failover-proxy-provider</name>

<value>org.apache.hadoop.yarn.client.ConfiguredRMFailoverProxyProvider</value>

</property><property>

<name>yarn.client.failover-proxy-provider</name>

<value>org.apache.hadoop.yarn.client.RequestHedgingRMFailoverProxyProvider</value>

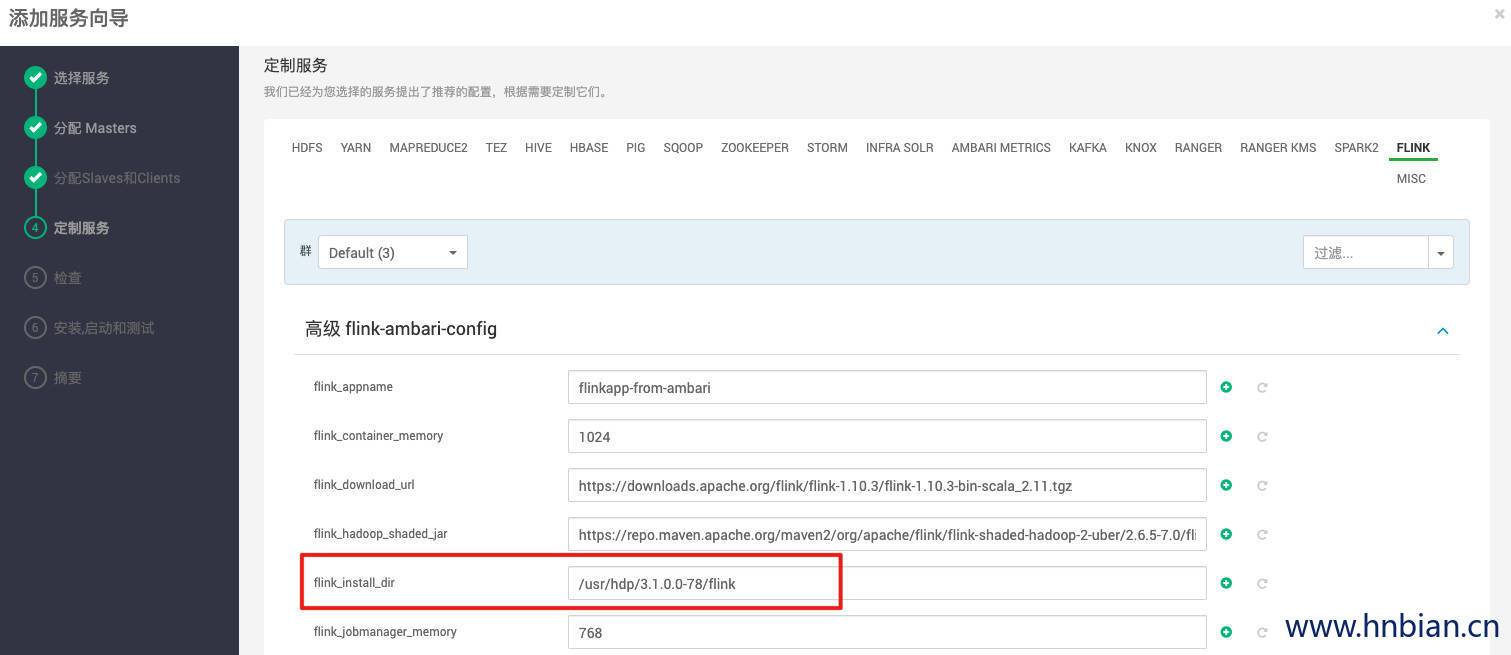

</property>- 编辑configuration/flink-ambari-config.xml修改下载地址
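`flink_download_url` follows the Apache release layout `<mirror>/flink/flink-<version>/flink-<version>-bin-scala_<scala>.tgz`, as both URLs below show. A minimal sketch that builds the URL for a given mirror base, Flink version and Scala version; the function name is hypothetical. Note that downloads.apache.org only keeps current releases and older ones are moved to archive.apache.org/dist, so a URL that works today may need a different base later:

```bash
# Hypothetical helper: build a Flink binary tarball URL from a mirror base,
# a Flink version and a Scala version (default 2.11, as in this guide).
flink_tarball_url() {
  local base="${1%/}" version="$2" scala="${3:-2.11}"   # strip a trailing "/"
  echo "${base}/flink/flink-${version}/flink-${version}-bin-scala_${scala}.tgz"
}

flink_tarball_url https://downloads.apache.org 1.10.3
# prints https://downloads.apache.org/flink/flink-1.10.3/flink-1.10.3-bin-scala_2.11.tgz
```

Before saving the config, `curl -fsI "$(flink_tarball_url https://archive.apache.org/dist 1.10.3)"` is a quick way to confirm the tarball is actually reachable from the Ambari hosts.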

[root@node1 FLINK]# vim configuration/flink-ambari-config.xml

<property>

<name>flink_download_url</name>

<!--<value>http://www.us.apache.org/dist/flink/flink-1.9.1/flink-1.9.1-bin-scala_2.11.tgz</value>-->

<value>https://downloads.apache.org/flink/flink-1.10.3/flink-1.10.3-bin-scala_2.11.tgz</value>

<description>Snapshot download location. Downloaded when setup_prebuilt is true</description>

</property>

5. Create the Flink group and user
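`groupadd` and `useradd` exit with an error when the group or user already exists, for example when this step is repeated on a node. A minimal idempotent sketch of the same two commands; the function name and its default arguments are hypothetical, and it has to run as root:

```bash
# Hypothetical helper: create the group and user only if they are missing.
# Mirrors "groupadd flink" and "useradd -d /home/flink -g flink flink"; run as root.
ensure_flink_user() {
  local user="${1:-flink}" group="${2:-flink}"
  # /etc/group lines start with "<group>:", so this matches the exact group name
  if ! grep -q "^${group}:" /etc/group; then
    groupadd "$group"
  fi
  if ! id -u "$user" >/dev/null 2>&1; then
    useradd -d "/home/${user}" -g "$group" "$user"
  fi
}
```

Calling `ensure_flink_user` with no arguments creates `flink:flink`. On hosts where groups come from LDAP or another NSS source rather than `/etc/group`, `getent group` is the more complete existence check.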

# Add the group

[root@node1 FLINK]# groupadd flink

# Add the user

[root@node1 FLINK]# useradd -d /home/flink -g flink flink

6. Restart ambari-server and check the list of available services

[root@node1 ~]# ambari-server restart

Using python /usr/bin/python

Restarting ambari-server

Waiting for server stop...

Ambari Server stopped

Ambari Server running with administrator privileges.

Organizing resource files at /var/lib/ambari-server/resources...

Ambari database consistency check started...

Server PID at: /var/run/ambari-server/ambari-server.pid

Server out at: /var/log/ambari-server/ambari-server.out

Server log at: /var/log/ambari-server/ambari-server.log

Waiting for server start.............................................

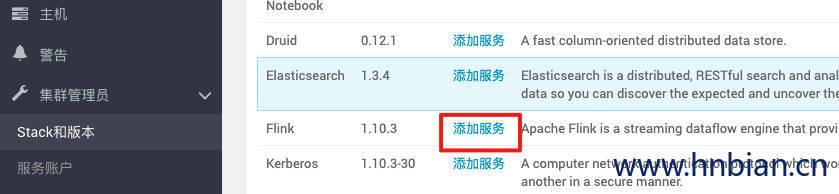

Server started listening on 8080- 查看安装服务中是否有 Flink

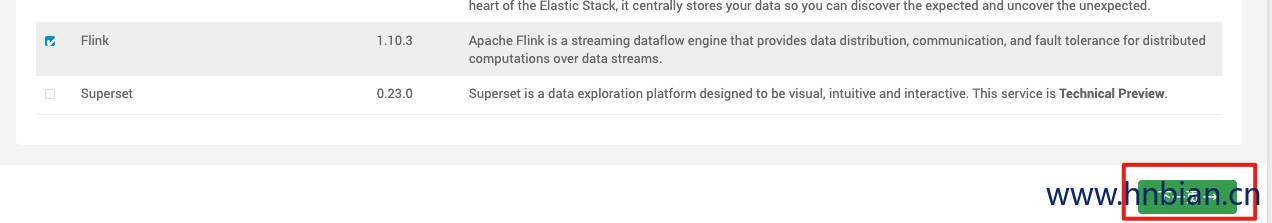

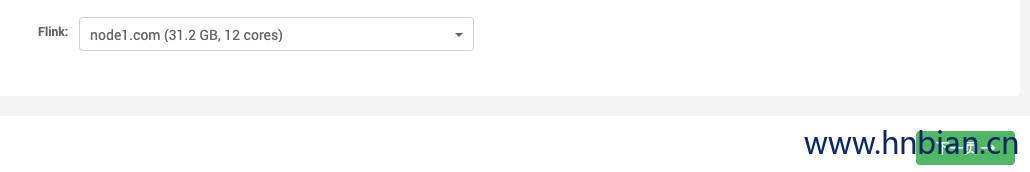

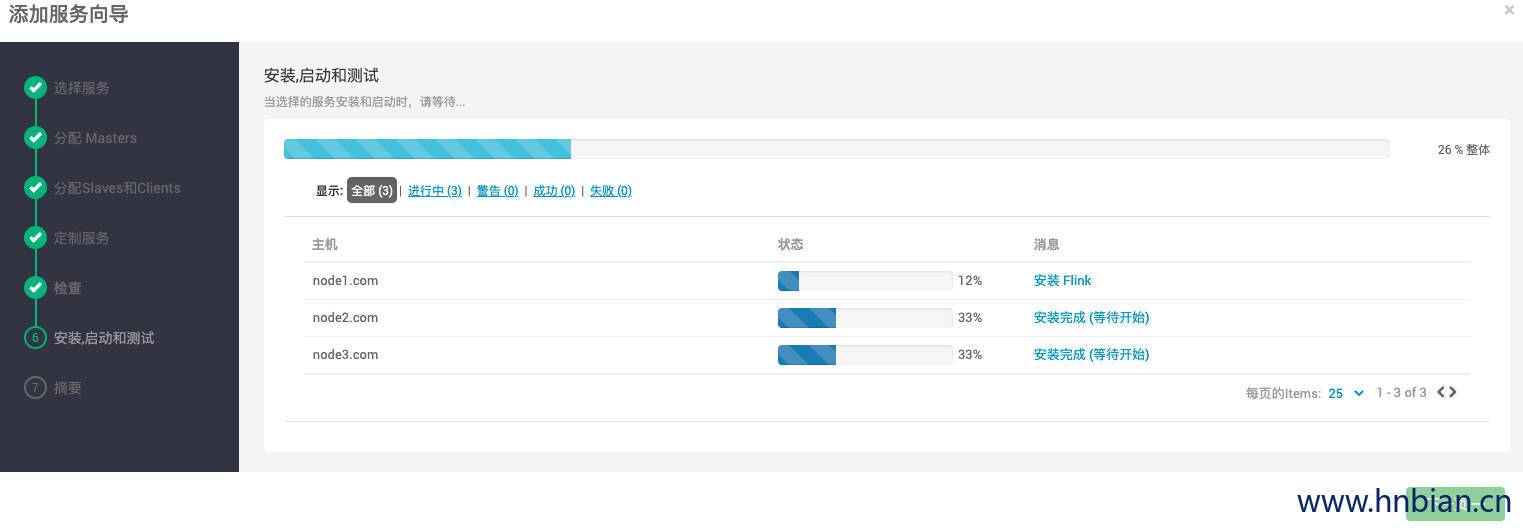

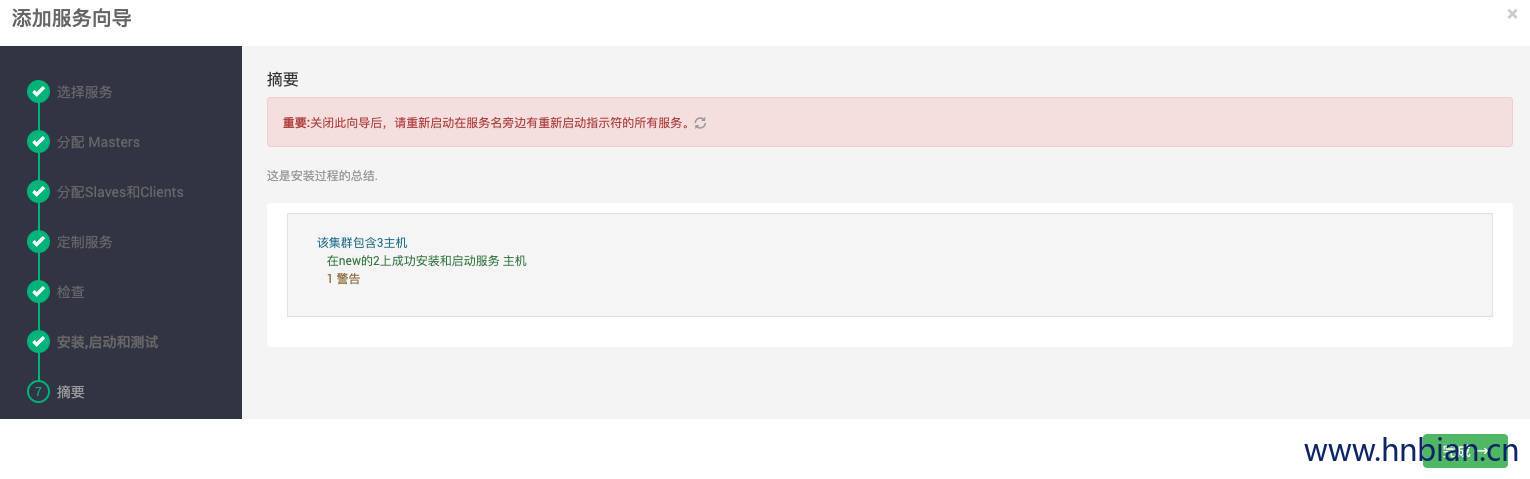
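Besides the web UI, the service definitions Ambari has loaded can be queried through its REST API under `/api/v1/stacks/HDP/versions/<version>/services/<service>`. A minimal sketch that builds that URL; the helper name is hypothetical, and the usage line assumes the default `admin`/`admin` credentials and the port 8080 shown in the log above:

```bash
# Hypothetical helper: build the Ambari REST URL for a service definition
# in a given HDP stack version (port defaults to 8080).
ambari_stack_service_url() {
  local host="$1" version="$2" service="$3" port="${4:-8080}"
  echo "http://${host}:${port}/api/v1/stacks/HDP/versions/${version}/services/${service}"
}

# Should return a JSON description if Ambari picked up the FLINK service
# (assumes default admin/admin credentials):
# curl -fsS -u admin:admin "$(ambari_stack_service_url node1 3.1 FLINK)"
```

With `-f`, curl exits non-zero on an HTTP error such as 404, which makes the check easy to script.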

7. Install Flink

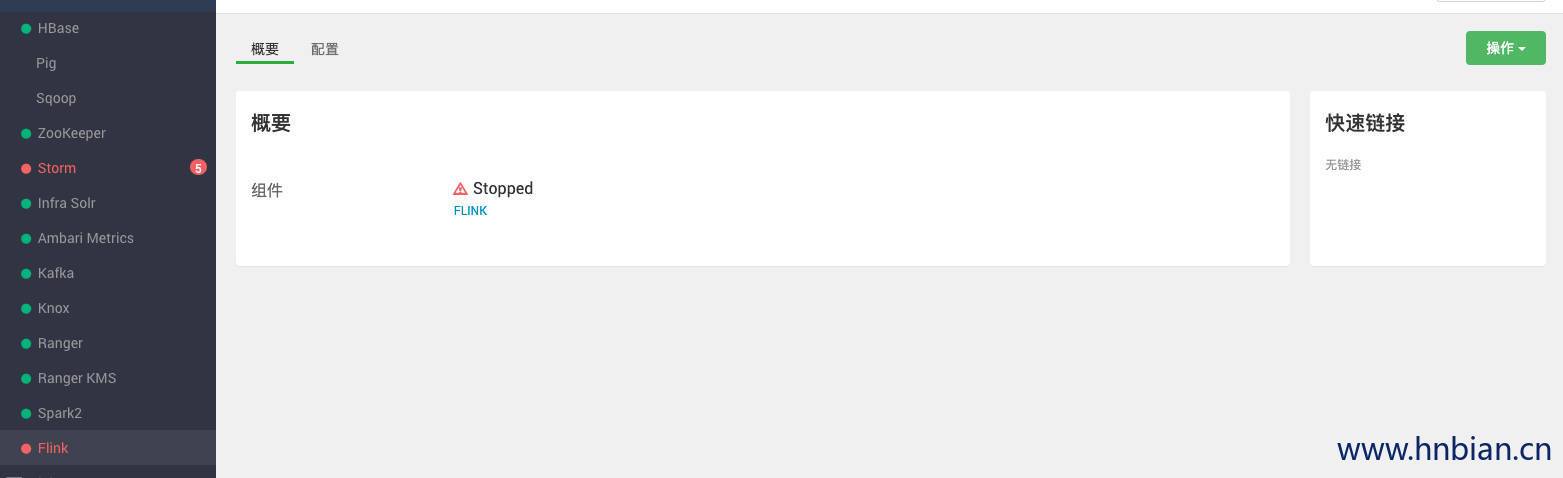

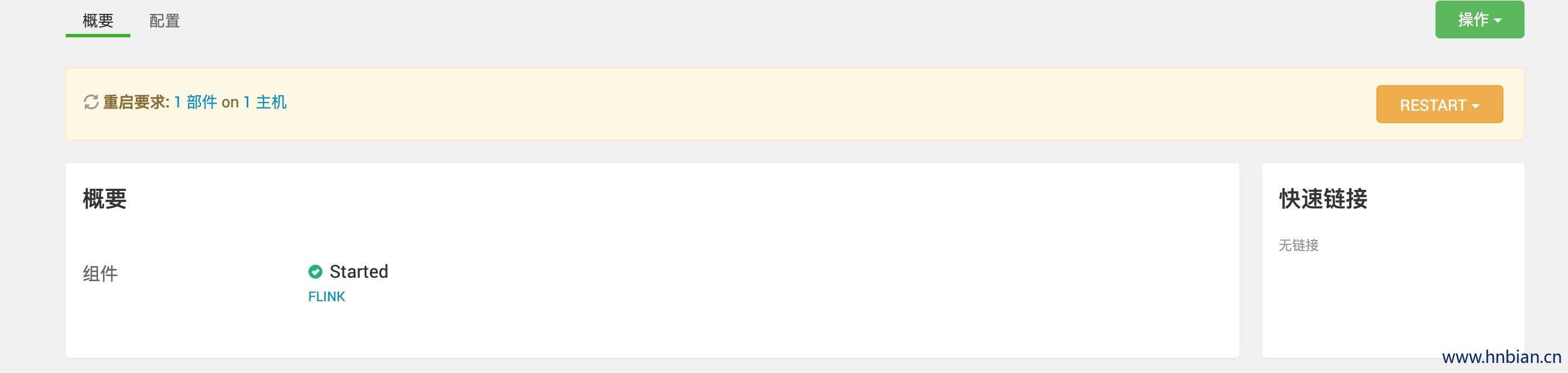

8. Start the Flink service

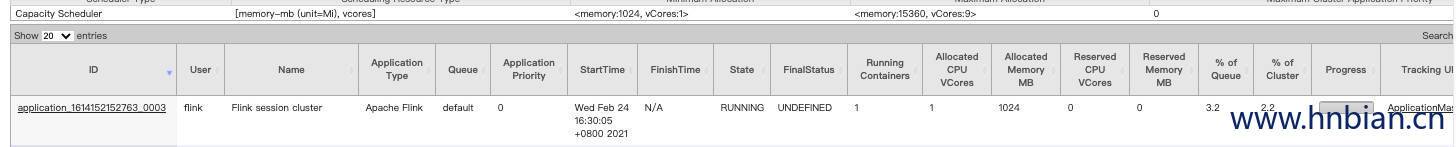

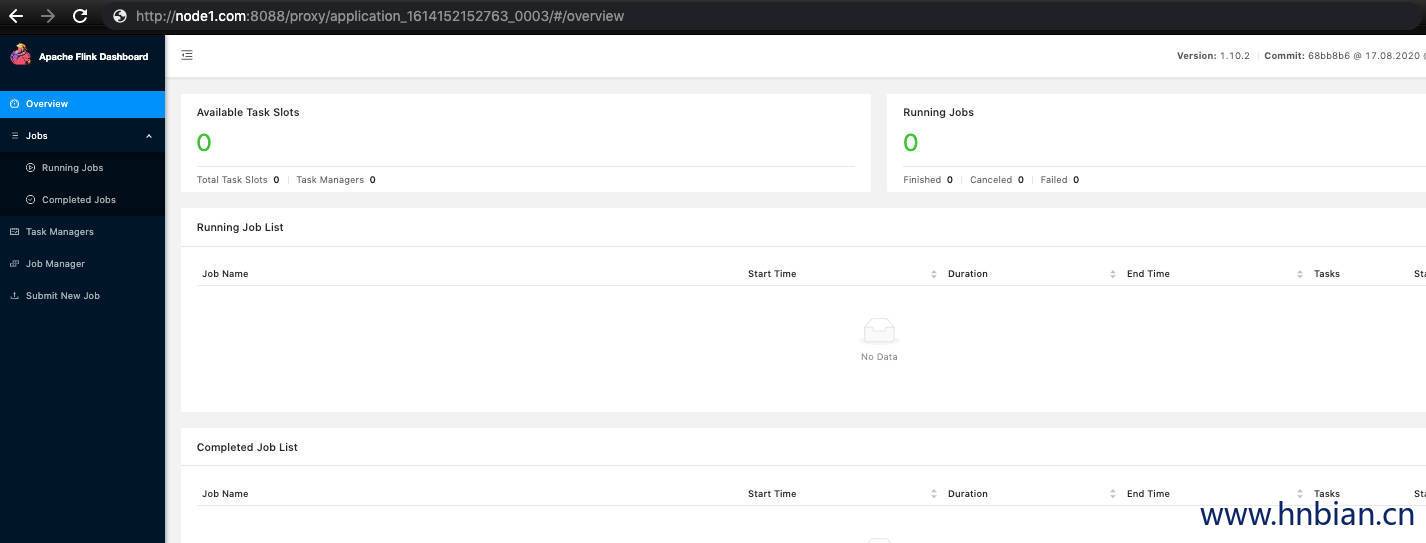
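The Ambari service starts Flink as a YARN session named `flinkapp-from-ambari` (the `-nm` value of the yarn-session.sh command quoted in section 10), so whether the start really succeeded can also be checked on the YARN side. A minimal sketch built on `yarn application -list -appStates RUNNING`; the function name is hypothetical:

```bash
# Hypothetical helper: succeed if a RUNNING YARN application with the given
# name exists. Uses the standard YARN CLI; run it as a user allowed to list apps.
flink_session_running() {
  local name="${1:-flinkapp-from-ambari}"
  yarn application -list -appStates RUNNING 2>/dev/null | grep -qF -- "$name"
}

# Example: flink_session_running && echo "Flink YARN session is up"
```

This is also a useful cross-check for the progress-bar problem described in section 10, where the session is running even though Ambari does not report completion.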

9. Test Flink

- Submit a job

[hdfs@node1 flink-1.10.2]$ bin/flink run ./examples/batch/WordCount.jar # submit the WordCount example bundled with Flink

Setting HADOOP_CONF_DIR=/etc/hadoop/conf because no HADOOP_CONF_DIR was set.

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/opt/flink-1.10.2/lib/slf4j-log4j12-

...

# Output

(a,5)

(action,1)

(after,1)

(against,1)

(all,2)

(and,12)

(arms,1)

(arrows,1)

(awry,1)

(ay,1)

(bare,1)

(be,4)

(bear,3)

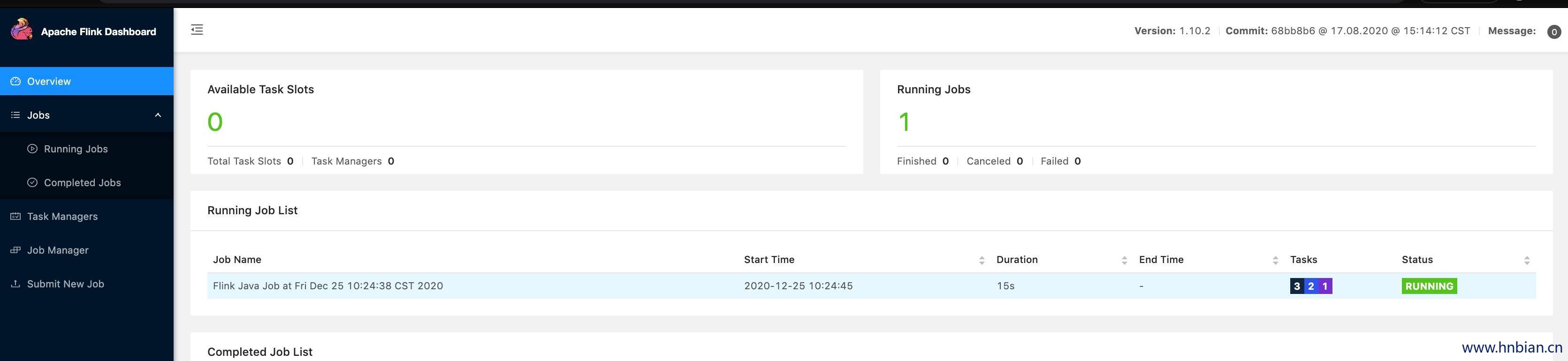

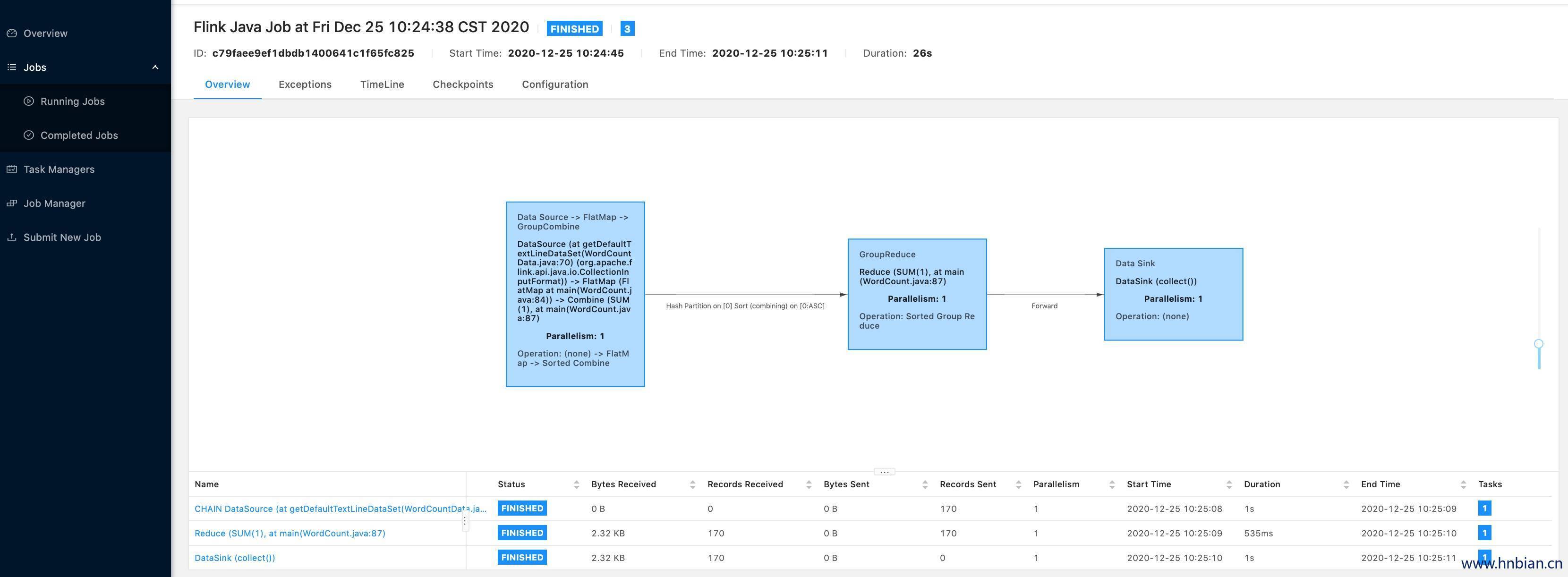

...

- Check the job in the Flink web UI
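When Flink runs as a YARN session, its web UI is normally reachable through the ResourceManager web proxy at `http://<rm-host>:<port>/proxy/<application-id>/`. This also gives a way in when Ambari shows no Flink web UI link, as described in the last problem below. A minimal sketch; the helper name is hypothetical, the ResourceManager host and port are assumptions for your cluster (8088 is YARN's default HTTP port), and the application ID comes from `yarn application -list`:

```bash
# Hypothetical helper: build the ResourceManager proxy URL for a YARN application.
# 8088 is the default HTTP port of yarn.resourcemanager.webapp.address.
yarn_proxy_url() {
  local rm_host="$1" app_id="$2" port="${3:-8088}"
  echo "http://${rm_host}:${port}/proxy/${app_id}/"
}
```

For example, `yarn_proxy_url node1 application_1614067200000_0001` (a made-up application ID) prints `http://node1:8088/proxy/application_1614067200000_0001/`.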

10. Problems encountered

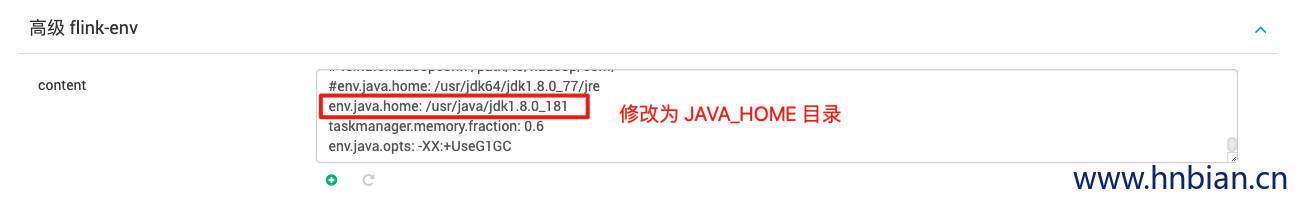

- On startup: java: command not found

resource_management.core.exceptions.ExecutionFailed: Execution of 'export ...9.3.22.v20171030.jar:/usr/hdp/3.1.0.0-78/tez/lib/jsr305-3.0.0.jar:/usr/hdp/3.1.0.0-78/tez/lib/metrics-core-3.1.0.jar:/usr/hdp/3.1.0.0-78/tez/lib/protobuf-java-2.5.0.jar:/usr/hdp/3.1.0.0-78/tez/lib/servlet-api-2.5.jar:/usr/hdp/3.1.0.0-78/tez/lib/slf4j-api-1.7.10.jar:/usr/hdp/3.1.0.0-78/tez/lib/tez.tar.gz; /usr/hdp/3.1.0.0-78/flink/bin/yarn-session.sh -n 1 -s 1 -jm 768 -tm

-qu default -nm flinkapp-from-ambari -d >> /var/log/flink/flink-setup.log' returned 127. /usr/hdp/3.1.0.0-78/flink/bin/yarn-session.sh: line 37: java: command not found

Solution:

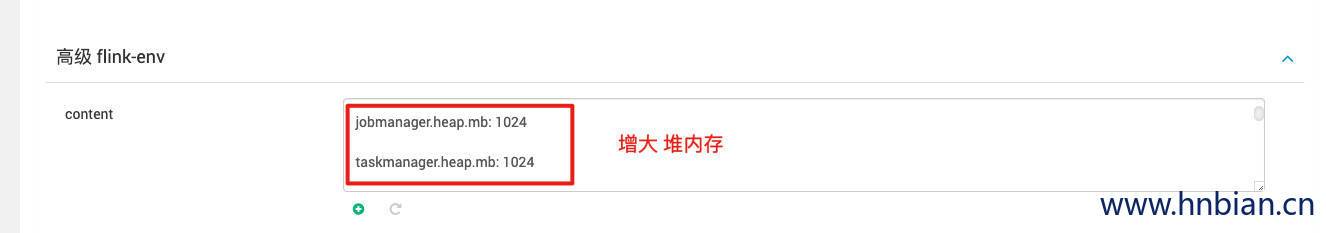
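Exit code 127 means the shell running yarn-session.sh could not find a `java` executable. As an assumption worth checking against `bin/config.sh` of your Flink version, the launcher scripts use `$JAVA_HOME/bin/java` when JAVA_HOME is set (Flink can also take it from `env.java.home` in flink-conf.yaml) and otherwise fall back to a plain `java` on PATH. A minimal diagnostic sketch that applies that lookup order; the function name is hypothetical:

```bash
# Hypothetical diagnostic: print the java binary a launcher would most likely
# use, checking $JAVA_HOME/bin/java first and then PATH. Returns 1 if neither exists.
resolve_java() {
  if [ -n "${JAVA_HOME:-}" ] && [ -x "${JAVA_HOME}/bin/java" ]; then
    echo "${JAVA_HOME}/bin/java"
  elif command -v java >/dev/null 2>&1; then
    command -v java
  else
    echo "no java found: set JAVA_HOME or add java to PATH" >&2
    return 1
  fi
}
```

Run it in the environment of the user that starts the service. A login shell's PATH can differ from the environment the Ambari agent passes to the start script, so a `java` that works interactively does not rule this error out.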

- IllegalConfigurationException:

org.apache.flink.configuration.IllegalConfigurationException: Sum of configured Framework Heap Memory (128.000mb (134217728 bytes)), Framework Off-Heap Memory (128.000mb (134217728 bytes)), Task Off-Heap Memory (0 bytes), Managed Memory (25.600mb (26843546 bytes)) and Network Memory (64.000mb (67108864 bytes)) exceed configured Total Flink Memory (64.000mb (67108864 bytes)).

at org.apache.flink.runtime.clusterframework.TaskExecutorProcessUtils.deriveInternalMemoryFromTotalFlinkMemory(TaskExecutorProcessUtils.java:320)

at org.apache.flink.runtime.clusterframework.TaskExecutorProcessUtils.deriveProcessSpecWithTotalProcessMemory(TaskExecutorProcessUtils.java:248)

at org.apache.flink.runtime.clusterframework.TaskExecutorProcessUtils.processSpecFromConfig(TaskExecutorProcessUtils.java:147)

at org.apache.flink.client.deployment.AbstractContainerizedClusterClientFactory.getClusterSpecification(AbstractContainerizedClusterClientFactory.java:44)

at org.apache.flink.yarn.cli.FlinkYarnSessionCli.run(FlinkYarnSessionCli.java:547)

at org.apache.flink.yarn.cli.FlinkYarnSessionCli.lambda$main$5(FlinkYarnSessionCli.java:786)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1730)

at org.apache.flink.runtime.security.HadoopSecurityContext.runSecured(HadoopSecurityContext.java:41)

at org.apache.flink.yarn.cli.FlinkYarnSessionCli.main(FlinkYarnSessionCli.java:786)

Solution:
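The check that fails here is a plain sum. Adding up the components listed in the message (values in MB):

$$
\underbrace{128}_{\text{framework heap}} + \underbrace{128}_{\text{framework off-heap}} + \underbrace{0}_{\text{task off-heap}} + \underbrace{25.6}_{\text{managed}} + \underbrace{64}_{\text{network}} = 345.6\ \text{MB} > 64\ \text{MB}
$$

The managed memory of 25.6 MB is $0.4 \times 64$ MB, which matches Flink 1.10's default `taskmanager.memory.managed.fraction` of 0.4, and the 128, 128 and 64 MB entries match the 1.10 defaults for framework heap, framework off-heap and minimum network memory. Because those fixed parts alone add up to 320 MB, the total Flink memory given to the TaskManager (for a YARN session this is derived from the TaskManager memory passed with `-tm`) has to be well above 64 MB.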

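- The Ambari page displays incorrectly

After Flink is started from the Ambari web UI, the Flink yarn-session starts successfully, but the Ambari progress indicator never reaches 100%.

- The Ambari page has no link to the Flink web UI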